By Keith Larson

Lawrence MacNeil, VTScada Engineering Manager, discussed the various tools at the system architect’s disposal to ensure optimal SCADA system performance—even for very demanding applications.

As cheaper sensors and I/O proliferate in industrial systems, the supervisory control and data acquisition (SCADA) systems tasked with managing and coordinating them face increasingly ambitious projects in both scope and scale.

These “big” systems, which typically number 25,000 tags or more, require special consideration from an architectural viewpoint to ensure performance expectations are met, said Lawrence MacNeil, Trihedral Engineering Manager, in his presentation, “Thinking Big: VTScada Architecture Options,” at VTScadaFest 2023.

VTScada software itself is uniquely based on state logic, which means performance isn’t inherently limited by the number of tags but by the number of tags that are changing with time. Still, VTScada system designers have at their disposal a number of tools and architectural decisions that can help ensure that even very large applications run smoothly. In addition to the total number of I/O points, other factors to consider include the physical and logical distribution and update rates for those I/O, the number of connected devices, the number of users, data storage volume and duration, plus external data reporting needs.

“It’s not very useful to qualify a ‘large’ system simply by the number of I/O,” MacNeil explained. “It’s better to think in terms of applications that require extra design considerations for loading versus actual hard numbers.”

Common configurations and tools to deal with them

Nevertheless, some patterns do emerge, MacNeil added, describing five common system configurations:

- In-plant systems that architecturally resemble traditional distributed control systems (DCS), with centralized I/O and one- to two-second update cycles.

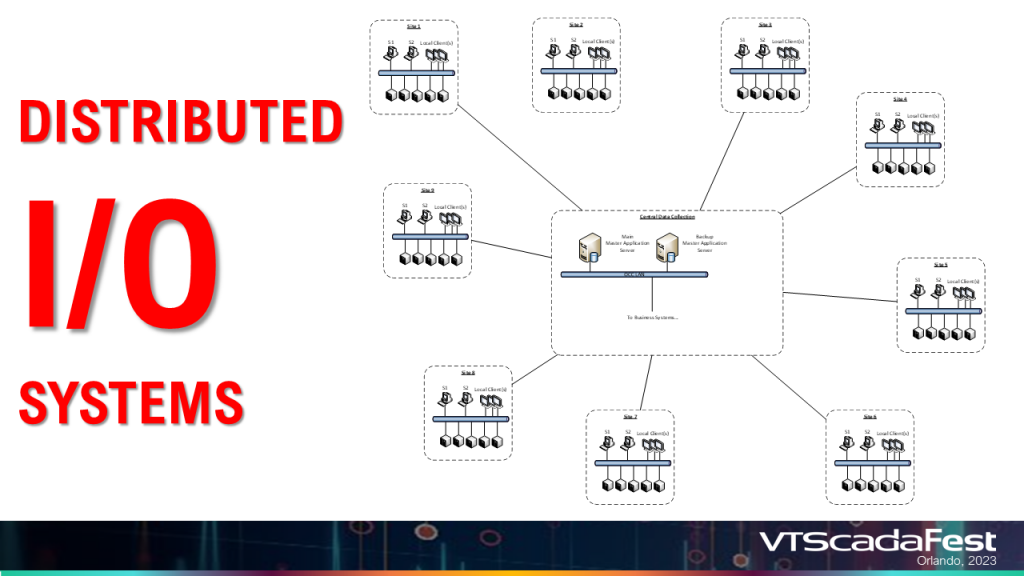

- Distributed I/O systems, in which an I/O server connects “topside” to multiple clients as well as to multiple distributed programmable logic controllers (PLCs) with associated I/O.

- Geographically distributed systems that include distributed control with local control rooms plus centralized business systems.

- Geographically distributed systems with central control that can also be operated locally.

- Multi-tenant systems that are a variation on distributed I/O systems and are commonly hosted in a cloud environment such as Microsoft Azure or Amazon Web Services (AWS). “These configurations are often offered by hardware manufacturers or system integrators and may encompass multiple customer systems with data isolation,” MacNeil said.

The tools that the VTScada system architect has at his or her disposal to manage and distribute the tasks associated with these different types of systems include the following:

- Load distribution, that is, distributing SCADA tasks among multiple servers.

- Data proxying, that is, passing data through a proxy client to its ultimate destination, which can reduce network traffic.

- System partitioning, which involves setting up master and subordinate systems, distributing tag structures, and using multiple historian and alarm database servers.

- Using an outward-facing DMZ, or demilitarized zone, server to provide secure “read only” access to system data.

- Scaling of server capabilities and network bandwidth.

- Software settings, such as dead-banding the sharing and logging of I/O values. (Changes in value below a certain threshold are not shared or logged.)
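To make the dead-banding idea concrete, here is a minimal Python sketch of a filter that drops any change smaller than a threshold. It is a generic illustration, not VTScada's implementation: the class name `DeadbandFilter`, the absolute-value threshold, and the sample readings are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DeadbandFilter:
    """Suppress updates that differ from the last reported value by less than a threshold."""
    threshold: float                       # hypothetical absolute deadband, in engineering units
    last_reported: Optional[float] = None  # last value that was actually shared or logged

    def accept(self, value: float) -> bool:
        """Return True if the value should be shared or logged."""
        if self.last_reported is None or abs(value - self.last_reported) >= self.threshold:
            self.last_reported = value
            return True
        return False


def filter_samples(samples: List[float], threshold: float) -> List[float]:
    """Return only the samples that pass the deadband."""
    deadband = DeadbandFilter(threshold)
    return [s for s in samples if deadband.accept(s)]


# Illustrative readings: small jitter around 10.0 is dropped, real moves are kept.
readings = [10.0, 10.2, 10.4, 11.0, 11.3, 12.5]
kept = filter_samples(readings, threshold=0.5)
```

Note that each new value is compared against the last value that was reported, not the last value that was read, so slow drift is still captured once it accumulates past the threshold.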

These tools can be used in various ways to ensure that performance demands are met on a consistent basis. For example, a simple in-plant system with one server connecting to both clients and I/O would benefit from a second server, so that one server handles the clients and the other handles the I/O connections. “Running drivers is demanding,” MacNeil explained. In addition, one could add a third server to act as historian. “In VTScada, ‘server list’ is a powerful tool that allows distribution of services among multiple servers.”
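The three-server split described here can be modeled as a mapping from each service to a list of servers. The Python sketch below is only a conceptual model and does not reflect VTScada's configuration syntax. It assumes that each list is ordered and that the first server currently online takes the role; the server and service names are hypothetical.

```python
from typing import Dict, List, Optional, Set

# Hypothetical service-to-server assignment: primary first, then fallbacks.
SERVER_LISTS: Dict[str, List[str]] = {
    "io_drivers": ["srv-io", "srv-clients"],
    "client_sessions": ["srv-clients", "srv-io"],
    "historian": ["srv-hist", "srv-io"],
}


def active_server(service: str, online: Set[str]) -> Optional[str]:
    """Return the first online server in the service's ordered list, or None."""
    for server in SERVER_LISTS[service]:
        if server in online:
            return server
    return None


def assignments(online: Set[str]) -> Dict[str, Optional[str]]:
    """Map every service to the server that would currently run it."""
    return {service: active_server(service, online) for service in SERVER_LISTS}


all_up = assignments({"srv-io", "srv-clients", "srv-hist"})
hist_down = assignments({"srv-io", "srv-clients"})
```

With all three servers online, each service runs on its own machine. If the assumed historian server goes offline, the historian role falls back to the I/O server in this model, which shows how one ordered list per service covers both load distribution and redundancy.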

For distributed systems, consider giving each system its own alarm database, alarm historian and system historian servers, MacNeil continued. “This can be accomplished in two ways: a single application with multiple alarm databases and historian tags or multiple applications in a master-subordinate configuration.” Partitioning a single application yields essentially the same result as a master-subordinate configuration.

Single app or master-subordinate?

One of the more subtle decisions for dealing with large systems is whether to use multiple SCADA applications configured in a master-subordinate relationship, or a single application with tasks spread across multiple servers.

The master-subordinate configuration typically works well for distributed applications across multiple facilities, multiple individual control centers with no interactions, and multiple application maintenance teams, MacNeil said. “Other pros in favor of this approach are that the system is partitioned by default; no filtering or tag start control are needed; complex server lists are easier to maintain; and each application creates its own alarm database and application historian by default.”

On the con side, it’s more difficult to maintain security with a master-subordinate configuration as all subordinate apps must use common security settings; tag types must exist in an OEM layer shared by all subordinate apps and the master app; plus, this configuration will entail higher memory overhead than a single application.

A single application works well when a single physical facility is involved, information is shared by most users, and a single team is responsible for maintaining the SCADA application. “Other pros in favor of a single application are that it can be easier to manage security, no shared OEM layer is required and one compressed ChangeSet file contains the entire application—although it can get large,” MacNeil said. On the con side, system partitioning for local HMIs requires extra set up, information for all system components (e.g., IP addresses of PLCs) can be exposed, and server lists can quickly get complex in large systems.

When choosing server hardware, MacNeil recommended high clock speeds and lower numbers of cores over lower clock speeds and more cores. “Lots of RAM is a good idea, plus solid-state drives make a dramatic difference.” More detailed recommendations are available at: http://www.vtscada.com/scada-system-requirements

Networking considerations must take into account requirements that can vary widely depending on desired data throughput, MacNeil said. “Bandwidth estimates should be done as part of the design,” he added. “We can help you with this.”
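As a rough illustration of what such an estimate might look like, the Python sketch below multiplies the rate of changing values by an assumed message size and by the number of receivers. Every number here is a made-up assumption (update rate, bytes per update, receiver count, protocol overhead factor), and the formula is a back-of-envelope model rather than a VTScada sizing method.

```python
def estimate_bandwidth_bps(changes_per_second: float,
                           bytes_per_update: int,
                           receivers: int,
                           overhead_factor: float = 1.5) -> float:
    """Rough bits per second needed to send every value change to every receiver.

    overhead_factor is an assumed allowance for protocol headers and framing.
    """
    return changes_per_second * bytes_per_update * 8 * receivers * overhead_factor


# Assumed scenario: 2,000 value changes per second, 32 bytes each,
# sent over one link to 10 remote clients.
direct = estimate_bandwidth_bps(2000, 32, receivers=10)

# Same scenario with a proxy at the remote site: the link carries one copy,
# and the proxy fans the data out locally.
proxied = estimate_bandwidth_bps(2000, 32, receivers=1)
```

Under these assumptions the direct case needs about 7.7 Mbit/s on the shared link and the proxied case about 0.77 Mbit/s. The model also shows why the count of changing values, not the total tag count, drives the result.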

In summary, MacNeil recommended the following steps to make sure your next large VTScada project successfully meets expectations:

- Distribute the processing load wherever possible using additional servers.

- Break the task down into smaller pieces with master-subordinate servers or multiple alarm databases and historians.

- Use proxying so you don’t do twice (or three times) what only needs to be done once.

- Reduce noise with dead-banding.

- Avoid non-parameterized expressions.

- Server hardware matters, as does network capacity.

Find additional information on Understanding VTScada Application Architecture.

See VTScadaFest Highlights

Author: Keith Larson – Keith Larson is group publisher responsible for Endeavor Business Media’s Industrial Processing group, including Automation World, Chemical Processing, Control, Control Design, Food Processing, Pharma Manufacturing, Plastics Machinery & Manufacturing, Processing and The Journal.

Originally Published: Optimization levers for large SCADA systems | Control Global